Generative Reality Lab

The Generative Reality Lab, led by Prof. Hao Li, works in Computer Vision, Graphics, and Machine Learning. His team develops generative AI technologies for digital humans, virtual teleportation, world capture, media synthesis, and visual effects.

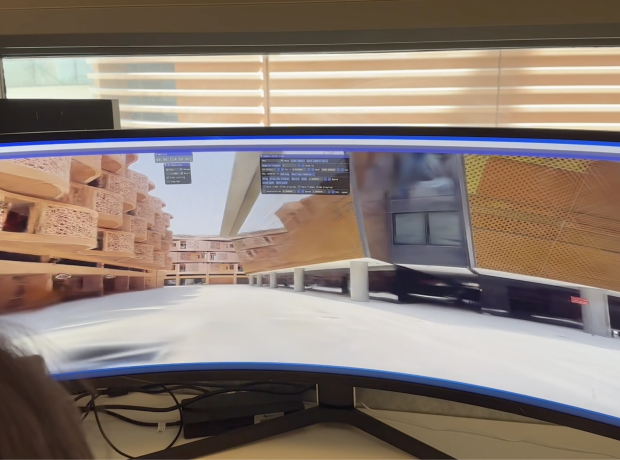

Meta Wall

The MetaWall is a multi-purpose 270-degree LED space built for immersive interactive rendering, data visualization, virtual production, and volumetric capture with controlled lighting, making complex information more accessible and engaging. It allows us to explore blending physical and digital elements, which makes the MetaWall a versatile tool in various fields, from education to entertainment.

Image, Video, & 3D Content Generation

The goal is to revolutionize digital content creation by achieving heightened realism and interactivity within virtual environments. This research is pivotal in enhancing the visual fabric of the metaverse and holds vast potential for applications in entertainment, education, and virtual reality experiences.

Avatars & Immersive Communication

This study aims to bridge the gap between physical and digital interaction by creating avatars that accurately mimic human expressions and gestures, thereby enriching the sense of presence and engagement in virtual spaces. This work not only advances technology but also explores the social and psychological dynamics of interaction in immersive digital worlds.

World Capture & Virtual Teleportation

This initiative focuses on harnessing advanced technologies to digitally map and replicate real-world environments, enabling users to virtually ‘teleport’ to these spaces. By creating highly detailed and accurate virtual renditions of physical locations, this project seeks to dissolve the barriers between reality and the digital realm.